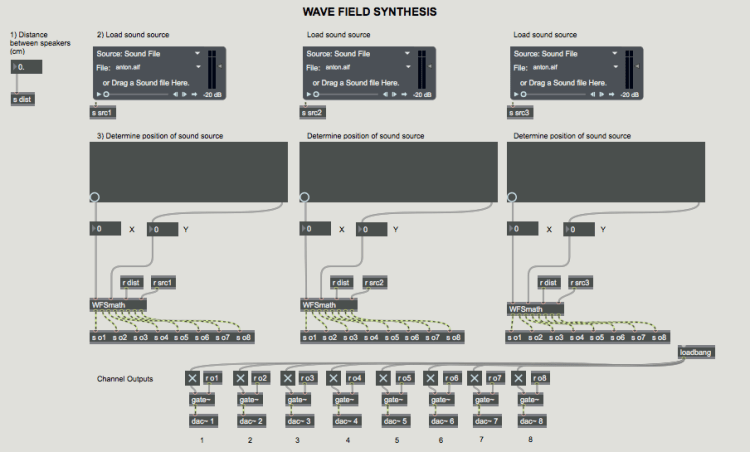

With the help of team members John Sloan and Harrison Holt, I built a Max/MSP/Jitter patch that uses Wave Field Synthesis (WFS) to spatialize audio over a linear array of speakers. We successfully tested the patch on an 8-speaker array in NYU’s spatial audio research lab.

Wave Field Synthesis (WFS) is a spatial audio rendering technique in which an array of closely spaced speakers produces an artificial wavefront that represents a spatialized virtual source. Unlike traditional stereo or surround sound spatialization, the perceived location of a virtual source rendered with WFS does not depend on, or change with, the listener’s position.

Stereo vs. WFS

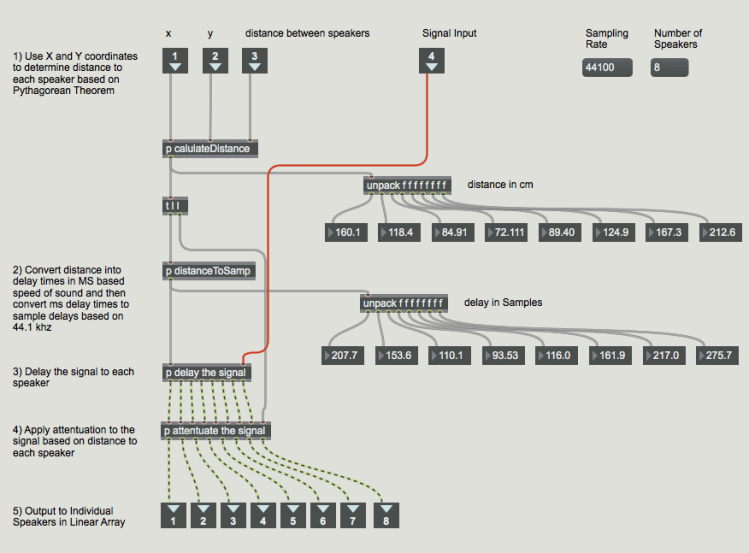

Stereo or surround sound panning primarily uses amplitude differences between speakers to create a perceived “phantom image” of a spatialized source. For the effect to work, however, the listener must sit in a “sweet spot” between the speakers. Wave Field Synthesis, on the other hand, applies small changes in delay and amplitude along an array of speakers to synthesize a wavefront, which conveys the source’s position consistently for any listening position in front of the array. (See the Wikipedia article on Wave Field Synthesis to learn more.)
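
To make the “small changes in delay and amplitude” concrete, here is a minimal Python sketch (not the Max patch itself) of how per-speaker delays and gains can be derived for one virtual point source placed behind a straight speaker array. It assumes the speakers sit on the line y = 0, uses a speed of sound of 343 m/s, delays each speaker by its extra distance to the source relative to the nearest speaker, and applies a simplified 1/√r gain falloff. Full WFS driving functions also include an angle-dependent weight and a pre-equalization filter, which are omitted here; the function name `wfs_feeds` and the 0.2 m speaker spacing in the usage example are hypothetical.

```python
import math
from dataclasses import dataclass

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at roughly 20 °C


@dataclass
class SpeakerFeed:
    delay_s: float  # delay relative to the nearest speaker, in seconds
    gain: float     # linear gain, 1.0 for the nearest speaker


def wfs_feeds(source_x, source_y, speaker_xs, c=SPEED_OF_SOUND):
    """Simplified per-speaker delay and gain for a virtual point source.

    Hypothetical helper: speakers lie on y = 0 at x positions speaker_xs
    (metres); the virtual source is behind the array (source_y < 0).
    """
    if source_y >= 0:
        raise ValueError("virtual source must be behind the array (source_y < 0)")
    # Distance from the virtual source to each speaker.
    distances = [math.hypot(x - source_x, source_y) for x in speaker_xs]
    nearest = min(distances)
    feeds = []
    for r in distances:
        delay = (r - nearest) / c      # extra travel time compared with the nearest speaker
        gain = math.sqrt(nearest / r)  # 1/sqrt(r) falloff, normalised to the nearest speaker
        feeds.append(SpeakerFeed(delay, gain))
    return feeds


if __name__ == "__main__":
    # Eight speakers 0.2 m apart (hypothetical spacing), source 1 m behind the centre.
    positions = [-0.7, -0.5, -0.3, -0.1, 0.1, 0.3, 0.5, 0.7]
    for x, feed in zip(positions, wfs_feeds(0.0, -1.0, positions)):
        print(f"x={x:+.1f} m  delay={feed.delay_s * 1000:.3f} ms  gain={feed.gain:.3f}")
```

The centre speakers fire first at full gain, and the outer speakers follow slightly later and quieter, which is what curves the synthesized wavefront so it appears to radiate from the virtual source.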

The Max patch we built calculates the necessary delay and amplitude differences between speakers to create a wavefront for up to three spatialized virtual sources. You can view samples of the patch or download it below.
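
Once each source has its per-speaker delays and gains, the speaker signals are obtained by delaying and scaling each source’s audio and summing the contributions of all sources at every speaker. The Python sketch below illustrates that mixing step under a few assumptions: delays are rounded to the nearest sample (the actual patch may interpolate fractional delays), signals are plain lists of floats, and the three-source cap simply mirrors the patch’s limit. The function `render_speaker_feeds` is a hypothetical name, not part of the patch.

```python
from typing import List, Sequence, Tuple

MAX_SOURCES = 3  # mirrors the patch's limit of three virtual sources

# (mono signal, per-speaker delays in seconds, per-speaker linear gains)
Source = Tuple[Sequence[float], Sequence[float], Sequence[float]]


def render_speaker_feeds(sources: List[Source], num_speakers: int,
                         sample_rate: int = 44100) -> List[List[float]]:
    """Hypothetical mixer: delay, scale and sum each source into every speaker."""
    if num_speakers < 1:
        raise ValueError("need at least one speaker")
    if len(sources) > MAX_SOURCES:
        raise ValueError(f"at most {MAX_SOURCES} virtual sources are supported")
    # Size the output buffers so the most-delayed copy of each signal fits.
    length = 0
    for signal, delays, gains in sources:
        if len(delays) != num_speakers or len(gains) != num_speakers:
            raise ValueError("need one delay and one gain per speaker")
        max_delay = max(round(d * sample_rate) for d in delays)
        length = max(length, len(signal) + max_delay)
    outputs = [[0.0] * length for _ in range(num_speakers)]
    for signal, delays, gains in sources:
        for n in range(num_speakers):
            offset = round(delays[n] * sample_rate)  # nearest-sample delay
            gain = gains[n]
            out = outputs[n]
            for i, x in enumerate(signal):
                out[offset + i] += gain * x  # add this source's contribution to speaker n
    return outputs
```

Because every source is rendered independently and then summed, adding a second or third virtual source only adds another set of delays and gains per speaker.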

Inside the WFSmath object:

If you want to see the nitty-gritty math inside the subpatchers or try out the patch yourself, you can download it below.
